Pair AWS with microservices deployment to meet CI/CD goals

When combined with microservices, these AWS offerings can provide an easier way to adopt a fully Agile development methodology.

By combining microservices architecture and AWS tools, developers can fully embrace the CI/CD lifestyle without the need to host their own build and deployment system.

Every development team can take advantage of tools, such as CodePipeline and CodeBuild, to ease the use of fully Agile development methodologies. Here are some ways to configure your microservices for increased automation in AWS and use tools that will help with essential tasks, like messaging and load balancing.

Why microservices help

A microservices deployment strategy should meet two priority goals. The first is to help reduce the coupling of unrelated parts. The second is to improve scalability and speed up the rate at which developers can release new features. When developers separate each microservice, they can update one specific feature without impacting others.

Since microservices are always part of a larger application, they need to connect to produce a full service. For example, individual microservices that manage authorization, play videos and recommend new videos can all combine into one API. These microservices are simple individually, but together they become the core of an application. From an API standpoint, it makes sense to serve all of them from the same API host, so you'll need to connect them into one service.

While open source options, including Kong and Nginx, can proxy HTTP requests to a microservice or function-as-a-service endpoint, AWS also has built-in tools that might work better, depending on where you host your code. Amazon API Gateway, for example, is the go-to service to transform an HTTP request into a Lambda invocation.

Amazon API Gateway

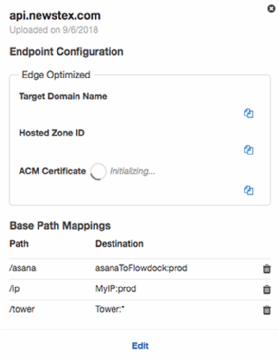

For microservices that span multiple API gateways, Amazon API Gateway provides custom domains. You can configure a custom domain to map to multiple API gateways by base resource path, and you can serve it with a custom Secure Sockets Layer certificate. For example, in the following setup, the domain api.newstex.com is set up to proxy requests to three different microservices.

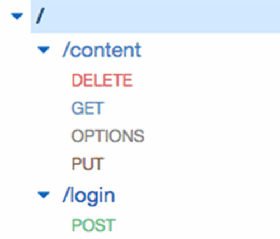

Requests to /asana go to the asanaToFlowdock API gateway, requests to /ip go to the MyIP API gateway, and requests to /tower go to the Tower API gateway. Each of these API gateways consists of several microservices and Lambda functions that manage their own tasks. For example, the Tower service consists of a few APIs that surround the /content resource and one that surrounds the /login resource (see image).
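The base path routing described above can be sketched in a few lines of Python. This is only an illustration of the lookup that a custom domain performs, not the API Gateway implementation. The `BASE_PATH_MAPPINGS` table and the `resolve_api` helper are hypothetical names, and the mapping simply mirrors the three example paths.

```python
# Hypothetical table mirroring the example: first path segment -> API name.
BASE_PATH_MAPPINGS = {
    "asana": "asanaToFlowdock",
    "ip": "MyIP",
    "tower": "Tower",
}


def resolve_api(path):
    """Return (api_name, remaining_path) for a request path, or None if unmapped."""
    segments = path.strip("/").split("/", 1)
    api_name = BASE_PATH_MAPPINGS.get(segments[0])
    if api_name is None:
        return None
    # Whatever follows the base path is handed on to the mapped API.
    remaining = "/" + segments[1] if len(segments) > 1 else "/"
    return api_name, remaining


if __name__ == "__main__":
    for request_path in ("/tower/content", "/ip", "/unknown"):
        print(request_path, "->", resolve_api(request_path))
```

A request to /tower/content resolves to the Tower API with /content as the remaining path, while an unmapped base path resolves to nothing.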

The /content resource consists of three separate microservices or Lambda functions, as well as an OPTIONS method that handles cross-origin resource sharing (CORS) compatibility. A mock integration, created by the Enable CORS action in Amazon API Gateway, handles the OPTIONS method. Each of the DELETE, GET and PUT methods executes a different Lambda function. Each method can use the Lambda pass-through (proxy) integration, which converts the incoming request from Amazon API Gateway's format into the Lambda proxy format.
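As a rough sketch of what the three functions behind /content might look like, the Python handlers below accept an event in the Lambda proxy format and return the response shape that format expects (`statusCode`, `headers`, `body`). The in-memory `_CONTENT` store, the helper names and the `id` path parameter are assumptions made for illustration, since the article does not describe the Tower service's internals.

```python
import json

# In-memory stand-in for a real data store (assumption for this sketch).
_CONTENT = {}


def _reply(status, payload=None):
    """Build a response in the shape the Lambda proxy integration expects."""
    return {
        "statusCode": status,
        "headers": {"Access-Control-Allow-Origin": "*"},
        "body": "" if payload is None else json.dumps(payload),
    }


def _item_id(event):
    # Hypothetical path parameter, e.g. a resource such as /content/{id}.
    return (event.get("pathParameters") or {}).get("id")


def get_content(event, context):
    """Handler behind the GET method."""
    item = _CONTENT.get(_item_id(event))
    if item is None:
        return _reply(404, {"error": "not found"})
    return _reply(200, item)


def put_content(event, context):
    """Handler behind the PUT method."""
    item_id = _item_id(event)
    if item_id is None:
        return _reply(400, {"error": "missing id"})
    try:
        item = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _reply(400, {"error": "body must be JSON"})
    _CONTENT[item_id] = item
    return _reply(200, item)


def delete_content(event, context):
    """Handler behind the DELETE method."""
    _CONTENT.pop(_item_id(event), None)
    return _reply(204)
```

Keeping one small function per method means a change to the PUT logic can ship without touching the GET or DELETE code paths.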

Application load balancers and network load balancers

For microservices that don't run on AWS Lambda, developers can use AWS load balancers. Two types are most relevant here: network load balancers and application load balancers. An application load balancer is optimal for microservices deployment since it operates on a per-request basis.

Network load balancers are useful for services that operate on protocols other than HTTP/HTTPS, as they map an incoming connection at the network level and make no assumptions about the protocol in use. This is useful for services that host things like FTP or virtual private network connections. However, it's better to use the application load balancer for web APIs.

Application load balancers are relatively new to AWS managed services and let developers configure multiple microservices to listen on the same HTTP port. With an application load balancer, developers can set up a /content URL to hit one Docker container and a /login URL to hit a different Docker container. You can also configure these load balancers to monitor the health of each service, so that the load balancer still serves the /content URL even if the /login service goes down on an Elastic Compute Cloud instance. This per-service monitoring prevents the entire instance from terminating when one of the microservices fails.
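To make the path rules and per-service health checks more concrete, here is a small Python simulation. It is not how an application load balancer is configured or implemented. The `TargetGroup`, `PathRule` and `route` names, and the addresses, are hypothetical. For simplicity it picks the first healthy target rather than balancing across targets.

```python
from dataclasses import dataclass, field


@dataclass
class TargetGroup:
    """A set of targets for one microservice, each with a health flag."""
    name: str
    targets: dict = field(default_factory=dict)  # address -> is_healthy

    def healthy_targets(self):
        return [addr for addr, ok in self.targets.items() if ok]


@dataclass
class PathRule:
    """Send requests whose path starts with `prefix` to `group`."""
    prefix: str
    group: TargetGroup


def route(rules, path):
    """Return a healthy target address for the path, or None if none can serve it."""
    for rule in rules:
        if path == rule.prefix or path.startswith(rule.prefix + "/"):
            healthy = rule.group.healthy_targets()
            return healthy[0] if healthy else None
    return None


# Hypothetical setup: two containers on one instance, /login currently failing.
content = TargetGroup("content", {"10.0.0.5:8080": True})
login = TargetGroup("login", {"10.0.0.5:8081": False})
RULES = [PathRule("/content", content), PathRule("/login", login)]

if __name__ == "__main__":
    print(route(RULES, "/content/42"))  # served by the content container
    print(route(RULES, "/login"))       # no healthy target for this path
```

Because health is tracked per target group, the failing /login container does not stop requests to /content from being routed.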

Application load balancers are the non-Lambda equivalent of an API gateway, and they can route traffic to either instances or IP addresses. This means you can still add microservices that are not on AWS to an application load balancer, which is a great option for anyone who migrates an external microservices architecture into AWS. This approach helps you migrate each microservice individually, while you maintain the overall service that's hosted out of a single domain name.

Continuous deployment for microservices with AWS

Another great thing about microservices deployment is that it makes CI/CD less dangerous. When you deploy a change to just one small part of the system, it's less likely to break the user experience. AWS has options for this, too, such as CodeBuild, CodePipeline and CodeCommit.

For developers who push private code, AWS CodeCommit offers a hosted Git repository that can synchronize changes with other developers. As a part of AWS, CodeCommit integrates with both CodePipeline and CodeBuild. CodePipeline can automatically trigger builds based on specific events, such as a push to a particular branch, and can spin off a CodeBuild job to automatically build, test and deploy a microservice to Simple Storage Service, Elastic Beanstalk or AWS Lambda.

You can also configure CodePipeline to include manual steps, such as approval of a build, before it's pushed out to production. You can scope these steps so that builds to testing and staging go through without a manual review, but certain production builds require a senior developer's sign-off before going live. Additionally, since AWS Lambda and Amazon API Gateway both support canary-style deployments, a production build could initially serve just a portion of requests, such as half, wait for approval and then shift the remaining requests to the new version.
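The canary idea can be illustrated with a short Python sketch: a fraction of requests goes to the new version until someone approves the release, after which all traffic shifts over. This models only the traffic split, not the AWS services themselves. The function names and the 50% weight are assumptions chosen to match the example above.

```python
import random


def pick_version(canary_weight, rng=random):
    """Return 'canary' for roughly `canary_weight` of calls, otherwise 'stable'."""
    if not 0.0 <= canary_weight <= 1.0:
        raise ValueError("canary_weight must be between 0 and 1")
    return "canary" if rng.random() < canary_weight else "stable"


def next_weight(canary_weight, approved):
    """Shift all traffic to the new version once approved; otherwise hold the split."""
    return 1.0 if approved else canary_weight


if __name__ == "__main__":
    rng = random.Random(7)  # seeded so the demo is repeatable
    weight = 0.5            # half of the requests hit the new build
    sample = [pick_version(weight, rng) for _ in range(1000)]
    print("canary share before approval:", sample.count("canary") / len(sample))

    weight = next_weight(weight, approved=True)
    print("canary weight after approval:", weight)
```

If the canary misbehaves, the same mechanism works in reverse: setting the weight back to zero returns every request to the stable version.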